Usability Testing Before Launch: How to Watch Real Users Interact with Your Website

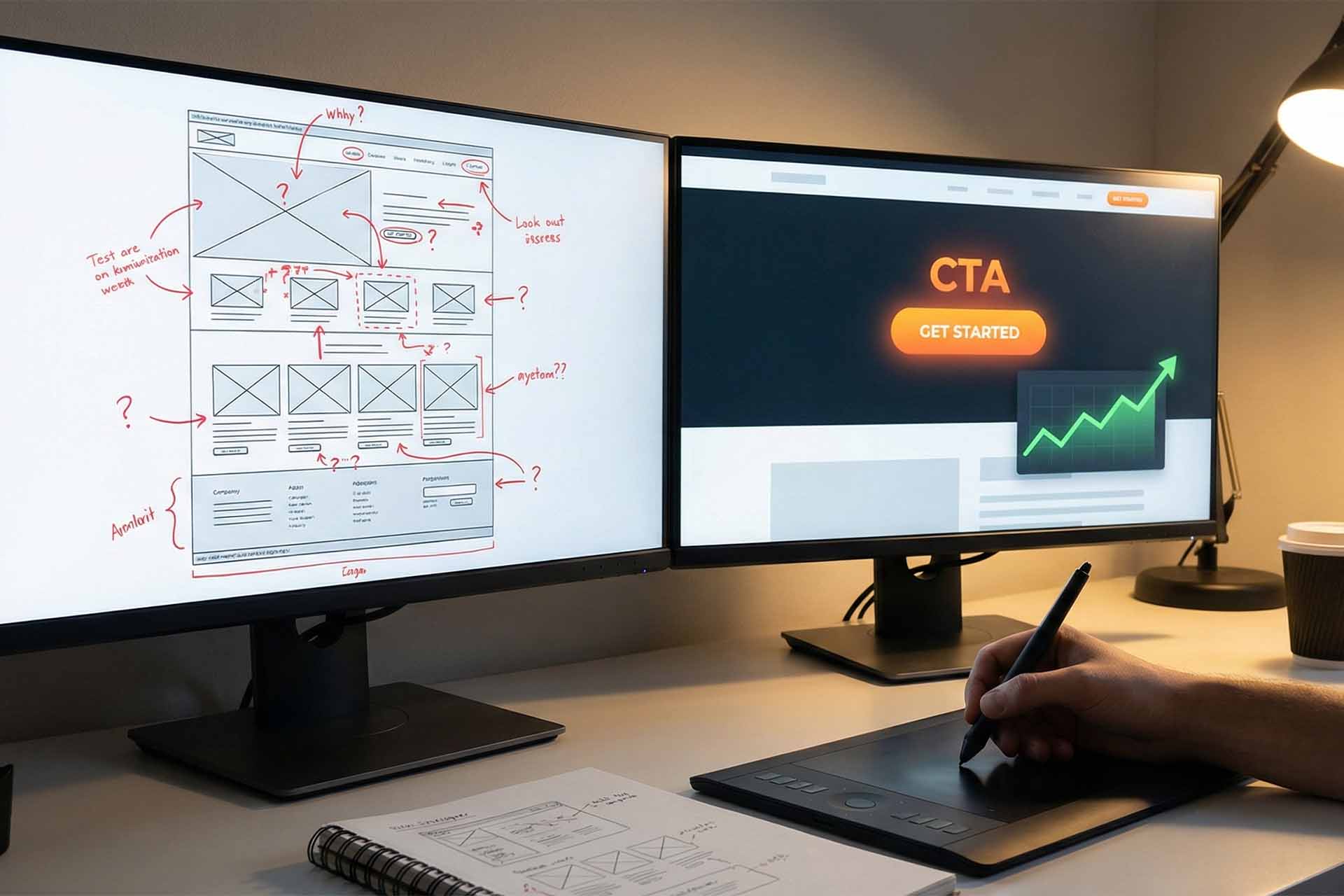

Before you hit the “launch” button, there’s one crucial step that can make or break your project: usability testing.

At Loop Media, we see it as a magnifying glass that reveals how real users navigate your website, exposing issues you might never notice on your own.

Why Usability Testing Is Essential Before Launch

1. Uncover Real Problems

What feels intuitive to you as a designer or developer may be confusing to users.

Usability testing exposes navigation issues, unclear buttons, or missing information that can frustrate visitors.

2. Save Time and Money

Fixing issues before launch is significantly cheaper than fixing them afterward. Post-launch changes often require re-coding, downtime, and the work of rebuilding user trust.

3. Enhance User Satisfaction

A seamless, enjoyable experience keeps visitors engaged and loyal. The easier your site is to use, the more likely users are to return and convert.

4. Boost Conversion Rates

If users can’t find the “Buy” or “Sign Up” button, conversions will suffer. Usability testing helps ensure the conversion path is clear, direct, and free of barriers.

5. Understand Real Human Behavior

Analytics show what users do, but usability testing helps explain why they do it. Observing real interactions gives you deep insight into how users think.

How to Watch Real Users Interact with Your Website

There are several usability testing methods, each with different costs and levels of depth.

1. Moderated Usability Testing

A facilitator sits with a user (in person or via video call) and asks them to perform specific tasks.

The facilitator observes, takes notes, and asks clarifying questions.

Though more time-consuming, it provides powerful qualitative insights into user behavior.

2. The Five-Second Test

Show a page to users for only five seconds, then ask: “What do you remember?” or “What is this page about?”

This quick test measures first impressions and message clarity.

However, it doesn’t assess deeper interaction patterns.

3. A/B Testing

Two different versions of a page are shown to separate groups of users.

Metrics like clicks or conversions are compared to determine which version performs better.

A/B testing provides strong quantitative data but doesn’t explain why users prefer one version.
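The article doesn’t say how to decide which version “performs better.” One common approach for conversion rates is a pooled two-proportion z-test. The sketch below assumes each visitor sees only one variant, conversions are counted once per visitor, and samples are large enough for the normal approximation. The function name and the visitor counts in the usage lines are hypothetical, for illustration only.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of variants A and B (pooled two-proportion z-test).

    conv_a, conv_b: number of visitors who converted in each variant.
    n_a, n_b: number of visitors shown each variant.
    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: 2,400 visitors per variant.
rate_a, rate_b, z, p_value = two_proportion_z_test(120, 2400, 150, 2400)
print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  z = {z:.2f}  p = {p_value:.3f}")
```

A low p-value (a threshold such as 0.05 is a common convention) suggests the difference is unlikely to be chance alone. Even then, the test says nothing about *why* one version wins, which is exactly the gap the article points out.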

Tips for Running an Effective Usability Test

- Define the exact problems you want to solve and the tasks users should complete.

- Recruit participants who closely match your target audience.

- Create realistic scenarios that reflect real-world interactions.

- Ask users to think aloud while performing tasks to capture their thoughts.

- Avoid helping or guiding them; observe the challenges they run into naturally.

- Record screens, audio, and facial reactions (with consent) for later analysis.

- After the session, look for recurring patterns and prioritize fixes accordingly.
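Spotting recurring patterns can be as simple as tallying how many participants hit each issue. The sketch below assumes session notes have already been reduced to a set of issue labels per participant, and it uses a hypothetical 1 to 3 severity scale to break ties. The function name, issue labels, and severity values are illustrative, not a prescribed method.

```python
from collections import Counter


def prioritize_issues(sessions, severity):
    """Rank usability issues by how many participants encountered them.

    sessions: list with one collection of issue labels per participant.
    severity: dict mapping issue label -> 1 (minor) to 3 (blocking); hypothetical scale.
    Returns (issue, participant_count, severity) tuples, most widespread first,
    with ties broken by higher severity.
    """
    counts = Counter()
    for observed in sessions:
        # Count each issue at most once per participant.
        counts.update(set(observed))
    ranked = sorted(
        counts.items(),
        key=lambda item: (item[1], severity.get(item[0], 1)),
        reverse=True,
    )
    return [(issue, n, severity.get(issue, 1)) for issue, n in ranked]


# Hypothetical notes from four test sessions.
sessions = [
    {"missed sign-up button", "unclear pricing"},
    {"missed sign-up button"},
    {"unclear pricing", "slow checkout"},
    {"missed sign-up button", "slow checkout"},
]
severity = {"missed sign-up button": 3, "unclear pricing": 2, "slow checkout": 1}

for issue, n, sev in prioritize_issues(sessions, severity):
    print(f"{n}/{len(sessions)} participants, severity {sev}: {issue}")
```

Counting participants rather than raw mentions keeps one talkative tester from inflating an issue’s priority.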

Loop Media’s Insight

At Loop Media, we believe usability testing isn’t an extra step; it’s an investment that helps ensure your product meets user expectations.

Every minute spent observing a real user before launch saves hours of frustration and fixes later.

Because every successful website begins with one key moment: watching users truly understand it.
